Liquid cooling: a cool approach for AI

HPE’s cooling expert, Jason Zeiler, explains why liquid cooling is ideally suited to cool next-generation accelerators for greater efficiency, sustainability, and density in future AI data centers

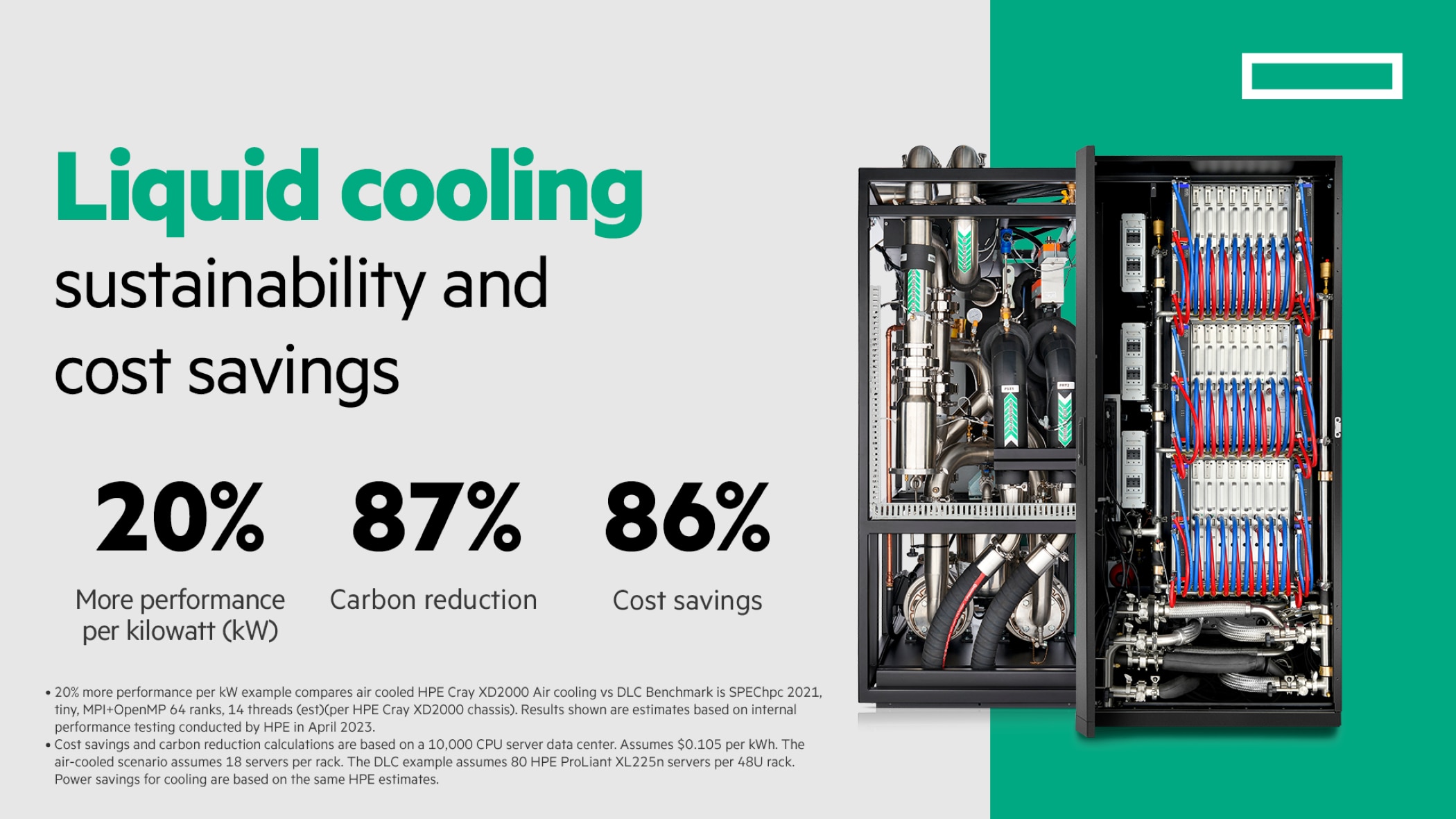

- Next-generation accelerators will require more efficient cooling than traditional air cooling can provide

- Liquid cooling efficiently cools compute-intensive systems while reducing the carbon footprint by 87% and costs by 86% annually

- Organizations worldwide already reuse, or plan to reuse, heated water recovered from liquid cooling for other energy needs

AI is one of the most compute-intensive workloads of our time. It’s no surprise that power consumption and the associated energy cost of AI systems will go up.

Earlier this year, the International Energy Agency (IEA) reported that data centers used about 2% of global electricity in 2022, and it predicts that their electricity consumption could more than double by 2026.[1]

While efficiency has improved in next-generation accelerators, power consumption will only intensify with AI adoption.

Data centers will need to run AI workloads more efficiently, yet many of today’s facilities are not equipped to meet the cooling demands of increasingly power-hungry processors.

That’s where liquid cooling comes in.

Staying cool in the age of AI

Traditional air cooling relies on fans to move air across components. With liquid cooling, and specifically direct liquid cooling, coolant is pumped directly into a server to absorb the heat emitted by the processors, then carried to a heat exchange system outside the data center.
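The heat transfer in a direct liquid cooling loop follows a simple steady-state energy balance, Q = ṁ · c_p · (T_out − T_in): the heat a processor gives off equals the coolant's mass flow times its specific heat times its temperature rise. The sketch below solves that balance for the outlet temperature. It is a hypothetical calculation, not an HPE design tool. It assumes plain water as the coolant (real loops often use treated water or water-glycol mixes with different properties), and the 1 kW load, 30 °C inlet, and 1.5 L/min flow are illustrative values that do not come from this article.

```python
"""Steady-state energy balance for a direct liquid cooling loop (illustrative sketch)."""

WATER_CP = 4186.0      # J/(kg*K), approximate specific heat of liquid water (assumed coolant)
WATER_DENSITY = 998.0  # kg/m^3, approximate density of water near 20 C


def outlet_temperature(inlet_c: float, heat_w: float, flow_l_per_min: float) -> float:
    """Return the coolant outlet temperature (C) after absorbing heat_w watts.

    Solves Q = m_dot * c_p * (T_out - T_in) for T_out.
    """
    # Convert volumetric flow (L/min) to mass flow (kg/s).
    mass_flow_kg_s = flow_l_per_min / 1000.0 / 60.0 * WATER_DENSITY
    return inlet_c + heat_w / (mass_flow_kg_s * WATER_CP)


if __name__ == "__main__":
    # Hypothetical node: 1 kW of processor heat, 30 C inlet water, 1.5 L/min of flow.
    t_out = outlet_temperature(inlet_c=30.0, heat_w=1000.0, flow_l_per_min=1.5)
    print(f"Outlet temperature: {t_out:.1f} C")
```

With these assumed inputs, the water leaves the server roughly 9.6 K warmer than it entered, at about 39.6 °C. That warmed water is what the facility's heat exchanger later rejects or reuses.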

At HPE, we have decades of experience innovating and delivering liquid-cooled systems worldwide to efficiently cool large-scale systems running high-performance computing (HPC) workloads.

Future AI infrastructure using the latest accelerators will require the same liquid cooling innovation to address power efficiency, sustainability, and system resiliency, which is core to keeping AI workloads running.

Let’s dig into the four main reasons why liquid cooling is the ideal solution for AI data centers.

Some chips just can’t take the heat

Our industry friends have done an amazing job engineering next-generation accelerators that deliver dramatically higher AI performance with greater efficiency.

New chips are designed to pack even more performance into a small footprint, but that also makes it harder to cool all the critical components inside.

If we can’t cool chips fast enough, data centers could face overheating that causes system failures and, ultimately, unplanned downtime for running AI jobs.

Liquid cooling can cool these chips faster and more efficiently because, per unit volume, water can hold more than 3,000 times as much heat as air.[2] This lets it absorb more of the heat emitted by accelerators and other components such as CPUs, memory, and networking switches.
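To make the heat-capacity comparison concrete, the sketch below multiplies density by specific heat to get each fluid's volumetric heat capacity. It then estimates how much of each fluid must flow to carry away a given heat load. The fluid properties are approximate textbook values near 20 °C and 1 atm, and the 1 kW load with a 10 K temperature rise is a hypothetical scenario, not an HPE specification.

```python
"""Compare how much heat water and air can carry per unit volume (illustrative sketch)."""

# Approximate textbook properties near 20 C and 1 atm (assumed values).
WATER = {"density_kg_m3": 998.0, "cp_j_kg_k": 4182.0}
AIR = {"density_kg_m3": 1.204, "cp_j_kg_k": 1005.0}


def volumetric_heat_capacity(fluid: dict) -> float:
    """Heat absorbed per cubic metre per kelvin of temperature rise, in J/(m^3*K)."""
    return fluid["density_kg_m3"] * fluid["cp_j_kg_k"]


def flow_needed_l_per_s(fluid: dict, heat_w: float, delta_t_k: float) -> float:
    """Volumetric flow (L/s) needed to carry heat_w watts with a delta_t_k rise."""
    m3_per_s = heat_w / (volumetric_heat_capacity(fluid) * delta_t_k)
    return m3_per_s * 1000.0


if __name__ == "__main__":
    ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
    print(f"Water vs. air, per unit volume: ~{ratio:,.0f}x")
    # Hypothetical 1 kW accelerator with a 10 K coolant temperature rise.
    for name, fluid in (("water", WATER), ("air", AIR)):
        print(f"{name}: {flow_needed_l_per_s(fluid, 1000.0, 10.0):.3f} L/s")
```

With these values the ratio comes out near 3,450. Carrying 1 kW would take about 0.024 L/s of water, compared with roughly 83 L/s of air. Per kilogram the gap is much smaller (about 4x). The volumetric comparison is the one that matters in practice, because it determines how much fluid a pump or fan must move.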

Realizing the value of AI, with less environmental impact

Efficiently cooling next-generation accelerators for system reliability is a key priority, but just as important, we owe it to Mother Nature to do it more sustainably.

Liquid cooling has significant sustainability and cost advantages for next-generation accelerators.

Let’s look at an HPC data center with 10,000 servers as an example.

If all 10,000 servers are air-cooled, the data center will emit more than 8,700 tons of CO2 a year, compared to around 1,200 tons a year with liquid-cooled servers.[3] That’s a reduction of 87%, preventing nearly 17.8 million pounds of CO2 from being released into the atmosphere annually.[3]
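The percentage above is a simple relative reduction, (baseline − improved) / baseline. The sketch below applies it to the rounded figures quoted in the text. Because the air-cooled figure is given as "more than 8,700 tons" and the liquid-cooled figure as "around 1,200 tons", the result is an approximation. Treating 8,700 as a lower bound gives at least about 86%, consistent with the quoted 87% once rounding is taken into account. The exact inputs behind the published figure are not given here.

```python
"""Relative emissions reduction in the 10,000-server example (illustrative sketch)."""

AIR_COOLED_TONS_CO2 = 8_700     # "more than 8,700 tons" per year, as quoted in the text
LIQUID_COOLED_TONS_CO2 = 1_200  # "around 1,200 tons" per year, as quoted in the text


def percent_reduction(baseline: float, improved: float) -> float:
    """Percentage drop from baseline to improved."""
    return (baseline - improved) / baseline * 100.0


if __name__ == "__main__":
    avoided = AIR_COOLED_TONS_CO2 - LIQUID_COOLED_TONS_CO2
    pct = percent_reduction(AIR_COOLED_TONS_CO2, LIQUID_COOLED_TONS_CO2)
    print(f"Avoided: {avoided:,} tons CO2/year ({pct:.1f}% reduction)")
```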

Unsurprisingly, this massive reduction in power comes with huge cost savings. Any chief financial officer monitoring energy spending will appreciate that.

In a data center with 10,000 liquid-cooled servers, the operator will pay only $45.99 per server annually, compared to $254.70 per air-cooled server. That saves nearly $2.1 million a year in operating costs.[3]
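The savings figure follows from multiplying the per-server difference by the fleet size. The sketch below reproduces that arithmetic using Python's `decimal` module, which avoids floating-point rounding on currency amounts. The per-server costs are the ones quoted above. The text does not break down what those costs include, so the sketch treats them as given.

```python
"""Annual operating-cost savings in the 10,000-server example (illustrative sketch)."""
from decimal import Decimal

SERVERS = 10_000
AIR_COST_PER_SERVER = Decimal("254.70")    # USD per server per year, as quoted in the text
LIQUID_COST_PER_SERVER = Decimal("45.99")  # USD per server per year, as quoted in the text


def annual_savings(servers: int, air: Decimal, liquid: Decimal) -> Decimal:
    """Fleet-wide yearly savings from running every server liquid-cooled."""
    return (air - liquid) * servers


if __name__ == "__main__":
    per_server = AIR_COST_PER_SERVER - LIQUID_COST_PER_SERVER
    total = annual_savings(SERVERS, AIR_COST_PER_SERVER, LIQUID_COST_PER_SERVER)
    print(f"Per server: ${per_server}  Fleet: ${total:,.2f}")
```

This prints a per-server saving of $208.71 and a fleet-wide saving of $2,087,100.00, which is the "nearly $2.1 million" cited above.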

Reusing energy from AI systems

The wonders don’t just stop there. Liquid cooling is the gift that keeps on giving.

After capturing heat, liquid-cooled systems transfer it to a heat exchange system outside the data center, where the heated water can be reused as an energy source for other buildings or facilities.

The U.S. Department of Energy’s National Renewable Energy Laboratory (NREL) has been doing this successfully for years. The lab, one of the world’s leading renewable energy centers, reused 90% of the heated water captured from its Peregrine system, an HPE Cray liquid-cooled supercomputer, as the primary heat source for its Energy Systems Integration Facility (ESIF) offices and laboratory space.

Our friends at QScale in Quebec are planning to do the same, but to help grow produce and address food scarcity. Using heat recovered through liquid cooling, QScale hopes to supply local greenhouses covering an area nearly the size of 100 football fields, producing the equivalent of 80,000 tons of tomatoes a year.

Similarly, in Norway, our partner Green Mountain plans to deliver its heated water to support fish farming at Hima, the world’s largest land-based trout farm. Hima is based on Recirculating Aquaculture Systems (RAS), a technology that recirculates pure, clean mountain water, and aims to produce about 8,000 tons of premium Hima® Trout per year, the equivalent of 22 million dinners.

More AI performance, smaller systems

As data centers plan and prepare to adopt future AI infrastructure, density will be a key factor in making room for advanced AI solutions.

Because liquid cooling doesn’t need the fans and supporting equipment that air-cooled solutions require, data centers can deploy fewer, more densely packed server racks to maximize space, or expand as needed.

In the 10,000-server data center example, a facility using liquid-cooled servers needs 77.5% less space.[3]

Additionally, over five years, liquid-cooled solutions use 14.9% less chassis power and deliver 20.7% higher performance per kW than air-cooled solutions.[4]
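The power and efficiency figures can be combined, because performance equals performance per kW multiplied by kW drawn. The sketch below assumes that both percentages describe the same hardware and workloads over the same five-year period, which the text does not state explicitly. It also converts the 77.5% space reduction into a relative floor-space ratio. The numbers are illustrative readings of the quoted figures, not independent measurements.

```python
"""Combine the quoted power, efficiency, and space figures (illustrative sketch)."""

POWER_RATIO = 1 - 0.149        # liquid-cooled chassis power relative to air-cooled
PERF_PER_KW_RATIO = 1 + 0.207  # liquid-cooled performance per kW relative to air-cooled
SPACE_RATIO = 1 - 0.775        # liquid-cooled floor space relative to air-cooled


def implied_performance_ratio(power_ratio: float, perf_per_kw_ratio: float) -> float:
    """Performance = (performance per kW) * kW, so the two ratios multiply."""
    return perf_per_kw_ratio * power_ratio


if __name__ == "__main__":
    perf = implied_performance_ratio(POWER_RATIO, PERF_PER_KW_RATIO)
    print(f"Implied performance vs. air-cooled: {perf:.3f}x")
    print(f"Floor space vs. air-cooled: {SPACE_RATIO:.3f}x")
```

Under that assumption, liquid-cooled systems would deliver about 2.7% more total performance (1.207 × 0.851 ≈ 1.027) while drawing 14.9% less power, in about 22.5% of the floor space.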

AI requires experience and trust

When it comes to AI, it pays to trust the experts. At HPE, we have over 50 years of experience and over 300 patents in liquid cooling.

We continue to build large, liquid-cooled systems for our customers to operate for several consecutive years without any issues. Our liquid-cooled solutions have also been proven to contribute to more sustainable computing.

In just the past two years, we delivered four of the world’s ten fastest systems, all of them HPE Cray EX liquid-cooled supercomputers.[5]

Of these, Frontier, the world’s fastest supercomputer, built for the U.S. Department of Energy’s Oak Ridge National Laboratory, achieved an engineering feat by breaking the exascale speed barrier while running tens of thousands of accelerators without failure. Even at that monumental scale, Frontier also earned the title of the world’s most energy-efficient supercomputer.[6]

So, we know a thing or two about what it takes to build and efficiently operate compute-intensive systems.

We have long been prepared for AI and are ready to keep helping customers power their AI journey with the most sophisticated cooling solutions in the world.